Companies that handle large volumes of online transactions, user analytics, and related activities work with data from many different sources. To manage it, you must know what data ingestion means and how to use it. Data ingestion is the essential starting point for everyday data processes, from analytics to well-managed data storage. However, the complex and diverse formats and data sources that companies encounter during processing make it challenging.

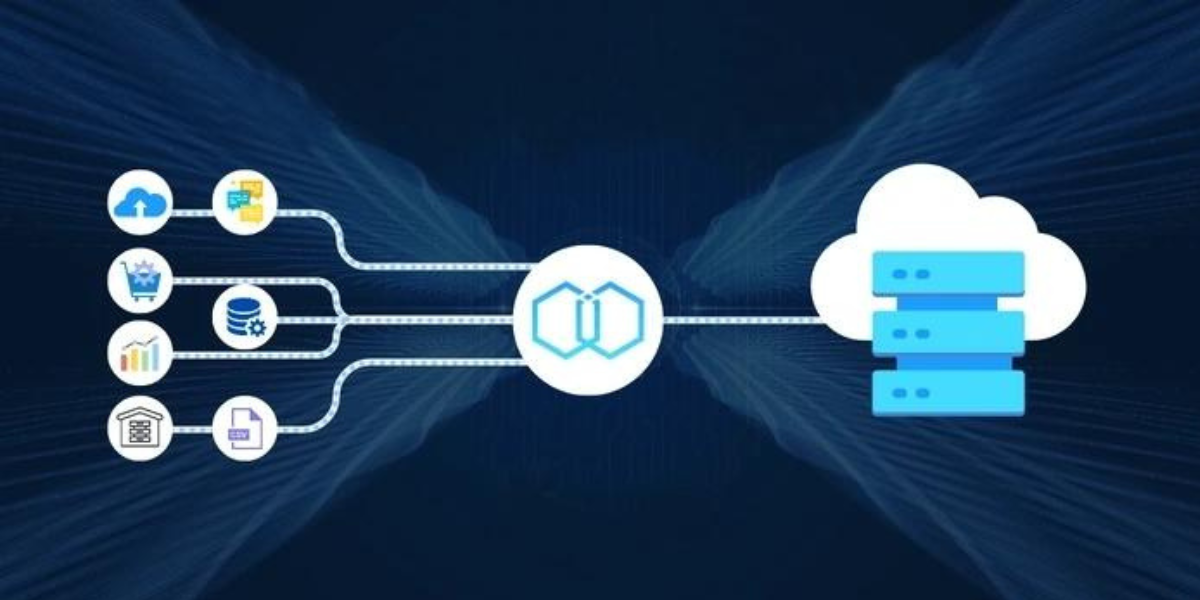

Data comes from structured databases, unstructured documents, APIs, IoT devices, social media platforms, and so on. Organizations must work hard to integrate all of these data sources to allow for analysis. Data ingestion refers to the process of transferring information from a variety of sources, such as data lakes, IoT devices, on-premises databases, and SaaS apps, to a target destination for further processing and study. Destinations include cloud data warehouses and data marts.

Data ingestion is an important technology that helps organizations make sense of the ever-growing volume and variety of data. We’ll dig deep into the technology so businesses can benefit more from the data they ingest. We’ll explore the various types of data ingestion, the process, and the tools that help with data ingestion.

This article will help you explore the world of data ingestion, its application in various industries, and the tools that help move data efficiently without leaving any necessary information behind.

When you finish this article, you’ll understand the definition of data ingestion, its different kinds, and how to choose the best tool for your organization.

What Is Data Ingestion?

Data ingestion may be described as transferring data from one or several sources to a destination used for query, analysis, or storage. Data sources could include IoT devices, data lakes, in-house databases, SaaS applications, and other platforms that hold significant information. Data from these sources can be loaded into platforms like a data warehouse or a data mart. In its simplest form, data ingestion collects information from where it originated, cleans it, and sends it to a location where an organization can read, use, and analyze it.

The data ingestion layer is the foundation of any analytics architecture. Downstream reporting and analytical systems depend heavily on consistent and easily accessible information. There are many ways to ingest data into your warehouse or data mart. Selecting the one that fits best depends on your design and the particular requirements of your business.
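The collect, clean, and send steps described above can be sketched in a few lines of Python. This is a minimal illustration, not a production design: the two inline sources, the `users` table, and the function names are all hypothetical, and an in-memory SQLite database stands in for a real warehouse.

```python
import csv
import io
import json
import sqlite3

# Hypothetical raw inputs standing in for two different sources.
CSV_SOURCE = "id,email\n1, Ada@Example.com \n2,\n"
JSON_SOURCE = '[{"id": 3, "email": "grace@example.com"}]'


def collect():
    """Gather raw records from each source into one list of dicts."""
    rows = list(csv.DictReader(io.StringIO(CSV_SOURCE)))
    rows += json.loads(JSON_SOURCE)
    return rows


def clean(rows):
    """Normalize ids and emails, and drop rows that have no email."""
    cleaned = []
    for row in rows:
        email = str(row.get("email") or "").strip().lower()
        if email:
            cleaned.append((int(row["id"]), email))
    return cleaned


def load(records, conn):
    """Write cleaned records to the destination table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS users (id INTEGER PRIMARY KEY, email TEXT)"
    )
    conn.executemany("INSERT OR REPLACE INTO users VALUES (?, ?)", records)
    conn.commit()


def ingest(conn):
    """Run collect -> clean -> load and return the number of rows loaded."""
    records = clean(collect())
    load(records, conn)
    return len(records)
```

Calling `ingest(sqlite3.connect(":memory:"))` loads the two valid rows and skips the one with an empty email.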

Why Does Data Ingestion Matter?

As the first stage of any data pipeline, data ingestion is crucial. Here are the main reasons why.

Setting The Foundation For Crucial Operational Data

After data has been ingested, it can be cleansed, deduplicated, processed, and propagated, depending on the demands of the specific pipeline. These steps are essential for efficient data storage and warehousing, analytics, or application usage. A reliable data ingestion tool can be set up to prioritize intake from critical sources so that their data is processed efficiently.

Facilitating Data Integration

In addition, data ingestion starts the integration process: it brings data from multiple sources together and, when needed, converts it into a common format so it can be viewed as a whole. Integrating data into a single system that all departments can use helps prevent data silos, which remain a prevalent issue.

Streamlining Data Engineering

Most aspects of modern data processing are fully automated. Once the system is set up, the multi-step process runs without human involvement, unlike earlier approaches that required data scientists to manipulate data manually. Automation frees them to tackle more pressing tasks and speeds up the entire data engineering process.

Enhancing Analytics And Making Better Decisions

For any data analytics project to succeed, the data must always be easily accessible to analysts. The data ingestion layer directs data to whichever storage is best for on-demand access, be it a data warehouse or a more specialized destination like a data mart. Additionally, ingesting information in the way best suited to a particular type of analysis, for example batch processing for daily expense reports or real-time ingestion of vehicles’ ADAS information, is crucial to the effectiveness of that analysis.

Data ingestion through a single platform ensures that everyone in the business can access and analyze quality data, which is crucial for making the right corporate decisions. Real-time ingestion is a significant advantage here: an analytical foundation built on fresh data provides more valuable insight and better decision-making.

Fundamental Concepts Of Data Ingestion

Let’s discuss the fundamental concepts to establish the basis for effectively managing data.

Data Sources

Data ingestion isn’t possible without data sources. They are the places you get your information from: databases, APIs, files, or even data scraped from websites. The more varied your data sources are, the broader the view they can give you. The challenge is fitting them together into the bigger picture.

Data Formats

Data comes in various forms and sizes, and you must be prepared for each. There is structured data (think CSV files or relational tables), semi-structured data (like JSON and XML), and unstructured data (text, pictures, etc.). Understanding the formats of your data is crucial to processing it efficiently.
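As a rough illustration of handling several formats, the sketch below parses CSV, JSON, and XML text into the same list-of-dicts shape using only the Python standard library. The `parse` dispatcher, the format labels, and the `record` tag name are assumptions made for this example.

```python
import csv
import io
import json
import xml.etree.ElementTree as ET


def parse_csv(text):
    """Each CSV row becomes a dict keyed by the header line."""
    return [dict(row) for row in csv.DictReader(io.StringIO(text))]


def parse_json(text):
    """Accept either a single JSON object or a list of objects."""
    data = json.loads(text)
    return data if isinstance(data, list) else [data]


def parse_xml(text, record_tag="record"):
    """Each <record> element becomes a dict of its child tags and text."""
    root = ET.fromstring(text)
    return [
        {child.tag: child.text for child in record}
        for record in root.iter(record_tag)
    ]


PARSERS = {"csv": parse_csv, "json": parse_json, "xml": parse_xml}


def parse(text, fmt):
    """Dispatch to the parser for the given format label."""
    try:
        parser = PARSERS[fmt]
    except KeyError:
        raise ValueError(f"unsupported format: {fmt}") from None
    return parser(text)
```

Note that CSV and XML values arrive as strings, while JSON keeps its native types, so a later transformation step still has to reconcile them.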

Data Transformation

You’ve collected lots of information from various sources, but it’s messy and inconsistent. This is where data transformation helps: cleansing, filtering, and aggregation make your data look its best and match the specifications of the system you’re loading it into.
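A small sketch of those three operations, assuming hypothetical order records with `order_id`, `customer`, and `amount` fields: it filters out incomplete rows, removes duplicates, cleans the customer name, and aggregates spend per customer.

```python
from collections import defaultdict


def transform(orders):
    """Filter, deduplicate, clean, and aggregate raw order records."""
    seen_ids = set()
    totals = defaultdict(float)
    for order in orders:
        order_id = order.get("order_id")
        amount = order.get("amount")
        # Filtering: skip records that lack required fields.
        if order_id is None or amount is None:
            continue
        # Deduplication: keep only the first record per order id.
        if order_id in seen_ids:
            continue
        seen_ids.add(order_id)
        # Cleansing: normalize the customer name.
        customer = str(order.get("customer", "")).strip().lower()
        # Aggregation: total spend per customer.
        totals[customer] += float(amount)
    return dict(totals)
```

Real pipelines usually push this work into an ETL tool or SQL, but the logic follows the same pattern.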

Data Storage

When ingestion is finished, your data is placed in an appropriate storage system. This is usually a database or a data warehouse, where it’s kept for study and processing. Selecting the best storage option ensures that your information is kept in order, readily accessible, and protected.

Advantages Of Data Ingestion

Effective data ingestion offers a variety of business advantages, which include:

- Access to data across the entire enterprise, including various departments and functional areas with diverse data demands.

- An easy way to clean and import data from hundreds of sources with many types and schemas.

- The ability to process large volumes of information at high speed, in real time or in batches, and to clean and timestamp data during ingestion.

- Lower costs and time savings compared with manual data aggregation, especially if the solution is delivered as a service.

- Real-time data ingestion that allows companies to spot problems and opportunities quickly and make informed choices.

- A way for even small companies to gather and analyze larger amounts of data and efficiently manage spikes in data.

- Cloud-based storage that can hold large quantities of data in raw form, allowing easy access whenever needed.

- Technology that engineers can use to ensure that software and apps move data swiftly and provide customers with the best experience.

Types Of Data Ingestion

Some companies need real-time data ingestion from IoT devices 24/7. Others only require regular data updates every twelve hours to operate effectively. It’s easy to believe that your company always needs access to every information flow from every source. The reality, however, is likely more complex. The value of big data for your business intelligence lies in more than the ability to integrate real-time data with every data lake, data warehouse, ETL platform, and data source. That is likely more data than anyone can use, and it will cost a lot of money to set up. The goal is to create automated data workflows tailored to deliver the right information to the right place at the right time.

For example, the marketing department might require event-driven data ingestion to satisfy specific requirements, like capturing when a product gets added to an online shopping cart. The merchandising department might ingest data each Monday at 8:00 AM, which is straightforward to automate. In either case, the data engineer must determine the best method to ingest the data.

Every type of data ingestion has its strengths and weaknesses. Most DataOps teams will likely want to employ a combination of them to keep data processing running smoothly and to enhance DataOps within the business.

Here is a brief overview of the different kinds of data ingestion.

Event-Driven Data Ingestion

Items added to a cart, email subscriptions, and specific navigation paths are all events that can trigger data ingestion. There are many ways to automate ingestion around particular events. Instead of streaming live data directly from your site, you can collect customer data through an API for the specific application and save money.

The business’s operations may also involve crucial events that don’t depend on customer interactions, like a shipment being dispatched, arriving, or being received. Event-based data ingestion is an excellent way to capture specific data frequently without needing colossal storage.
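The idea of ingesting only the events you care about can be sketched with a tiny dispatcher. The `EventIngestor` class and the event names are hypothetical; in practice the events would typically arrive through a webhook, an application API, or a message queue.

```python
from collections import defaultdict


class EventIngestor:
    """Routes incoming events to handlers registered for their type."""

    def __init__(self):
        self._handlers = defaultdict(list)

    def on(self, event_type, handler):
        """Register a handler to run whenever event_type occurs."""
        self._handlers[event_type].append(handler)

    def emit(self, event):
        """Dispatch one event and return how many handlers processed it."""
        handlers = self._handlers.get(event["type"], [])
        for handler in handlers:
            handler(event)
        return len(handlers)


# Hypothetical destination: a list standing in for real storage.
cart_events = []

ingestor = EventIngestor()
ingestor.on("cart.item_added", cart_events.append)

ingestor.emit({"type": "cart.item_added", "sku": "A-100"})  # ingested
ingestor.emit({"type": "page.viewed", "path": "/home"})     # no handler, ignored
```

Because only subscribed event types reach storage, the volume stays far smaller than a full real-time stream.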

Real-Time Data Ingestion

IoT businesses, autonomous vehicles, and AI-based services need real-time data ingestion workflows to run efficiently and keep customers safe. If you’re dealing with thousands of emails or API calls every hour and must know exactly what’s happening, real-time data ingestion can help.

It’s an expensive method to implement, but it’s worth the cost for products and services that depend on it. If, for instance, you’re developing a conversational AI based on Google’s LaMDA 2 language model (when it’s accessible), it will require a continuous data workflow to keep the dialogue as natural as possible. Another common use is streaming data through cloud-based AWS or Azure applications, where cloud storage or data lakes are linked directly to the data analytics platform you use to run advertising campaigns.

Batch-Based Data Ingestion

Batch-based data ingestion is exactly what it sounds like: a method of ingesting data in groups. Rather than streaming live data to the destination, batch-based ingestion handles your data in predetermined or scheduled batches.

The benefit? Batch ingestion typically reduces the time needed to process information and the overall storage requirements for most users. Since records are ingested together, there is less need to store them separately. Some companies are convinced they require immediate ingestion when they don’t actually need live, instant data streams to achieve their targets. Batch processing makes it simple to manage large volumes of incoming data without overloading systems, which can yield cost savings and improved efficiency.
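A minimal sketch of batch loading, assuming a hypothetical `readings` table and an SQLite connection as the destination: records are grouped into fixed-size chunks, and each chunk is written in a single transaction.

```python
import sqlite3
from itertools import islice


def batches(records, size):
    """Yield consecutive lists of at most `size` records."""
    if size < 1:
        raise ValueError("size must be at least 1")
    iterator = iter(records)
    while True:
        batch = list(islice(iterator, size))
        if not batch:
            return
        yield batch


def ingest_in_batches(conn, records, size=500):
    """Insert records batch by batch; return the number of rows loaded."""
    conn.execute("CREATE TABLE IF NOT EXISTS readings (sensor TEXT, value REAL)")
    loaded = 0
    for batch in batches(records, size):
        with conn:  # one transaction per batch, committed on success
            conn.executemany("INSERT INTO readings VALUES (?, ?)", batch)
        loaded += len(batch)
    return loaded
```

The batch size here is arbitrary. A scheduler such as cron would normally trigger the job, for instance every Monday at 8:00 AM.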

Combined (Lambda) Data Ingestion

Combined (Lambda) data ingestion blends batch-based, real-time, and event-based approaches. This ensures that you have the data you need, when you want it, and where you want it, instead of dealing with too much data, too little data, or data that arrives at the wrong time.

Suppose you’re creating a user-facing service or product built on top of a variety of data sources (including Azure and AWS data warehouses). In that case, knowing precisely which data ingestion technique suits each need is crucial, and you’re likely to end up using a combination of techniques to meet them all.
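One common way to picture the combined approach is a Lambda-style design with a batch view that is rebuilt periodically and a speed view that covers events received since the last rebuild. The toy counter below is a sketch under that assumption; the class and method names are invented for illustration.

```python
from collections import Counter


class LambdaCounter:
    """Counts events per key by combining a batch view and a speed view."""

    def __init__(self):
        self.master_log = []         # append-only record of every raw event
        self.batch_view = Counter()  # rebuilt from the full log on a schedule
        self.speed_view = Counter()  # events seen since the last batch run

    def ingest(self, key):
        """Real-time path: store the raw event and update the speed view."""
        self.master_log.append(key)
        self.speed_view[key] += 1

    def run_batch(self):
        """Batch path: recompute the batch view, then reset the speed view."""
        self.batch_view = Counter(self.master_log)
        self.speed_view.clear()

    def query(self, key):
        """Serving path: merge both views for an up-to-date answer."""
        return self.batch_view[key] + self.speed_view[key]
```

Queries stay current between batch runs because the speed view fills the gap, while the periodic recomputation keeps the long-term numbers authoritative.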

What Are Data Ingestion Tools?

Data ingestion tools can be the lifeblood of a company. They collect structured, semi-structured, and unstructured data and move it from sources to destinations. They automate tedious manual ingestion procedures, so organizations spend less time moving data and more time using it for better business decision-making.

Data travels through the data ingestion pipeline, a series of processes that move it from one location to another. It might begin in a database or another source of raw data, then pass through an ETL tool that cleans and formats it before it moves on to reporting software or a data warehouse for analysis. Accessing data efficiently and quickly is vital for any company competing in today’s digital world. Data ingestion tools have various features and abilities. To select the right tool for your requirements, you’ll have to consider several factors:

- Does data arrive as semi-structured, structured, or unstructured?

- Should data be ingested and processed in real time or in batches?

- How much data must the ingestion tool handle?

- Is there any sensitive information that needs to be hidden or secured?
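For the last question, one possible approach is to pseudonymize sensitive fields before they reach the destination. The sketch below replaces selected fields with a keyed hash (HMAC-SHA256). The field list and the hard-coded key are placeholders for illustration only; a real key would come from a secrets manager.

```python
import hashlib
import hmac

# Assumption: placeholder key for this example, never hard-code a real one.
SECRET_KEY = b"replace-me"
# Assumption: which fields count as sensitive depends on your data.
SENSITIVE_FIELDS = {"email", "phone"}


def mask_value(value):
    """Return a stable keyed hash so records can still be joined."""
    digest = hmac.new(SECRET_KEY, str(value).encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()


def mask_record(record):
    """Copy a record, replacing sensitive fields with masked values."""
    return {
        key: mask_value(value)
        if key in SENSITIVE_FIELDS and value is not None
        else value
        for key, value in record.items()
    }
```

Hashing keeps values joinable across tables but is not reversible, so use encryption instead if the original value must be recoverable.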

Data ingestion tools may be used in various ways. In one instance, they could move millions of records into Salesforce daily. They can also ensure that multiple apps share data regularly. Ingestion tools may also transfer marketing information to a business intelligence platform for analysis.

How Important Is It To Ingest Data?

Most businesses have vast quantities of raw data but still need to figure out how to use it. The data for all the activity on your site may be available in real time. That’s great, but how does that data get into your analytics software? Data ingestion is the process that moves raw data from its source to a place where you can use it. Your company might need better business intelligence to make better decisions or forecast next year’s budgets. You may need to move terabytes of IoT information into a warehouse to deliver what appears to be a simple customer experience on the front end. Data ingestion makes all of this feasible.

Data ingestion is only one aspect of DataOps, a collaborative and systematic approach to managing data. As a data engineer, your responsibility is to ensure that your data is well-organized, shared, safe, and valuable. But getting there takes work. The data ingestion process and data ingestion tools help users get started, manage the progress of data workflows, and share them with others.

Challenges In Data Ingestion

Now that you know how data can be ingested, here is a list of challenges businesses often face when ingesting data.

Maintaining Data Quality

When ingesting data from many sources, maintaining data quality and accuracy is the main challenge. Analytics will eventually be run on this data, but since ingested data is not used for BI on an ongoing basis, problems with data quality often go undiscovered. You can reduce this risk by using a data ingestion tool with built-in data quality functions.
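A quality check at ingestion time can be as simple as a set of validation rules that route bad records aside instead of loading them. The rules and field names below are illustrative assumptions, not a standard.

```python
def validate(record):
    """Return a list of quality problems found in one record."""
    problems = []
    if record.get("id") is None:
        problems.append("missing id")
    email = record.get("email") or ""
    if "@" not in email:
        problems.append("invalid email")
    amount = record.get("amount")
    if not isinstance(amount, (int, float)) or amount < 0:
        problems.append("invalid amount")
    return problems


def split_by_quality(records):
    """Separate clean records from rejected ones, keeping the reasons."""
    accepted, rejected = [], []
    for record in records:
        problems = validate(record)
        if problems:
            rejected.append((record, problems))
        else:
            accepted.append(record)
    return accepted, rejected
```

Keeping the rejected records together with their reasons makes quality problems visible early rather than leaving them to surface later in reports.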

Data Synchronization From Many Sources

Data exists in a variety of formats inside an organization. As the company grows, more data piles up, making it difficult to keep track of. Syncing or integrating all this data into one warehouse is the best solution. However, since the data comes from various sources, doing so takes time and effort. Data ingestion tools address this: they provide different interfaces to collect the data, transform it, and then load it.

Creating A Consistent Structure

For business intelligence to work correctly, you’ll need a consistent structure that uses data mapping functions to organize data points. Data ingestion tools can cleanse, transform, and map data points to the proper destination.
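Data mapping can be pictured as a per-source lookup table that renames fields to one shared schema. The source names and field names in this sketch are hypothetical.

```python
# Assumption: two made-up sources that name the same fields differently.
FIELD_MAPS = {
    "crm": {"CustomerID": "customer_id", "EMail": "email"},
    "shop": {"cust": "customer_id", "mail": "email"},
}


def map_record(source, record):
    """Rename a source record's fields to the shared target schema."""
    mapping = FIELD_MAPS[source]
    return {
        target: record.get(source_field)
        for source_field, target in mapping.items()
    }
```

Fields that are absent in a source record come through as `None`, which keeps every mapped record in the same shape.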

Final Thoughts

Data ingestion is a crucial technology that allows companies to extract and move information in an automated way. Once a data ingestion pipeline is in place, IT and other business teams can focus on getting information from the data they collect and discovering fresh insights. In addition, automated data ingestion may be a significant competitive advantage in today’s highly competitive markets.

The ability to ingest data is a crucial part of how modern companies grow, given the abundance of information. Everything needs to be processed efficiently and safely before being organized and stored. Once stored, the information is used by software that helps businesses maintain an advantage in the market. Knowing the steps to set up data ingestion will help your organization increase its advantage over its competitors.